In this post you will discover the effect of the learning rate in gradient boosting and how to tune it.


XGBoost counters overfitting by adding a regularization term to its training objective, which penalizes overly complex trees.
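To make the regularization idea concrete, here is a minimal sketch of the regularized leaf weight and split gain described in the XGBoost paper (penalty gamma per leaf plus an L2 penalty lambda on leaf weights), specialised to squared-error loss. It assumes per-example gradients g_i = prediction - target and hessians h_i = 1; the function names and the reg_lambda/gamma arguments are illustrative, not XGBoost's API.

```python
# Sketch of XGBoost-style regularization for squared-error loss (assumption:
# loss = 0.5 * (y - y_pred)**2, so gradient = y_pred - y and hessian = 1).
# Function names are hypothetical, chosen for this illustration only.

def grad_hess_squared_error(y, y_pred):
    """Per-example gradient and hessian of 0.5*(y - y_pred)^2 w.r.t. y_pred."""
    grads = [p - t for t, p in zip(y, y_pred)]
    hessians = [1.0] * len(y)
    return grads, hessians

def optimal_leaf_weight(grads, hessians, reg_lambda):
    """w* = -G / (H + lambda); a larger lambda shrinks the leaf value toward 0."""
    G, H = sum(grads), sum(hessians)
    return -G / (H + reg_lambda)

def split_gain(g_left, h_left, g_right, h_right, reg_lambda, gamma):
    """Gain of splitting one leaf into two; a negative gain means 'do not split'."""
    def score(G, H):
        return G * G / (H + reg_lambda)
    G_l, H_l = sum(g_left), sum(h_left)
    G_r, H_r = sum(g_right), sum(h_right)
    return 0.5 * (score(G_l, H_l) + score(G_r, H_r) - score(G_l + G_r, H_l + H_r)) - gamma

y_true = [3.0, 5.0, 4.0]
g, h = grad_hess_squared_error(y_true, [0.0, 0.0, 0.0])
print(optimal_leaf_weight(g, h, reg_lambda=0.0))  # 4.0 (the plain mean)
print(optimal_leaf_weight(g, h, reg_lambda=1.0))  # 3.0 (shrunk toward 0)
print(split_gain(g[:1], h[:1], g[1:], h[1:], reg_lambda=0.0, gamma=0.0))  # 0.75
print(split_gain(g[:1], h[:1], g[1:], h[1:], reg_lambda=1.0, gamma=0.0))  # -2.25
```

In this toy case the same split that looks worthwhile without regularization gets a negative gain once lambda = 1, which is the mechanism by which the penalty discourages complex trees.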

Tree constraints: these include the number of trees, tree depth, the number of nodes or leaves, and the number of observations per split.
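As a rough illustration of how tree constraints limit model complexity, the sketch below grows a one-feature regression tree that stops when it hits max_depth or when a split would leave fewer than min_samples_leaf observations on either side. Both parameter names are hypothetical choices for this example; real libraries expose their own names for these limits.

```python
# Minimal 1-D regression tree limited by two tree constraints.
# max_depth and min_samples_leaf are illustrative names, not a library API.

def _sse(values):
    """Sum of squared errors around the mean."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)

def build_tree(xs, ys, max_depth, min_samples_leaf, depth=0):
    """Return a nested dict; leaves store the mean target of their samples."""
    leaf = {"value": sum(ys) / len(ys)}
    # Constraint checks: depth limit and enough samples for two legal children.
    if depth >= max_depth or len(ys) < 2 * min_samples_leaf:
        return leaf
    pairs = sorted(zip(xs, ys))
    best = None
    # Only consider split points that keep >= min_samples_leaf samples per side.
    for i in range(min_samples_leaf, len(pairs) - min_samples_leaf + 1):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical feature values
        cost = _sse([y for _, y in pairs[:i]]) + _sse([y for _, y in pairs[i:]])
        if best is None or cost < best[0]:
            best = (cost, i)
    if best is None or best[0] >= _sse(ys):
        return leaf  # no split improves the fit
    i = best[1]
    lx, ly = zip(*pairs[:i])
    rx, ry = zip(*pairs[i:])
    return {
        "threshold": (pairs[i - 1][0] + pairs[i][0]) / 2,
        "left": build_tree(list(lx), list(ly), max_depth, min_samples_leaf, depth + 1),
        "right": build_tree(list(rx), list(ry), max_depth, min_samples_leaf, depth + 1),
    }

def count_leaves(node):
    if "value" in node:
        return 1
    return count_leaves(node["left"]) + count_leaves(node["right"])

xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.0, 2.0, 2.0, 5.0, 5.0, 9.0, 9.0]
for max_depth, min_leaf in [(0, 1), (1, 1), (3, 1), (3, 4)]:
    print(max_depth, min_leaf, count_leaves(build_tree(xs, ys, max_depth, min_leaf)))
```

Tightening either constraint yields fewer leaves, i.e. a simpler tree that is less able to memorize the training data.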

To address this, there are four enhancements to basic gradient boosting.

XGBoost also comes with extra randomization parameters, such as row and column subsampling, which reduce the correlation between the trees.
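A minimal sketch of per-tree randomization: before each tree is grown, draw a random subset of rows and a random subset of columns without replacement. The fractions mirror the idea behind XGBoost's subsample and colsample_bytree parameters, but the helper below is a hypothetical illustration, not XGBoost's implementation.

```python
import random

def sample_rows_and_columns(n_rows, n_cols, subsample, colsample, rng):
    """Pick row and column indices for one tree (sampling without replacement).

    subsample and colsample are fractions in (0, 1]; names are illustrative.
    """
    k_rows = max(1, int(round(subsample * n_rows)))
    k_cols = max(1, int(round(colsample * n_cols)))
    rows = sorted(rng.sample(range(n_rows), k_rows))
    cols = sorted(rng.sample(range(n_cols), k_cols))
    return rows, cols

# Each tree sees a different slice of the data, so the trees are less alike.
rng = random.Random(0)
for tree_id in range(3):
    rows, cols = sample_rows_and_columns(10, 5, subsample=0.8, colsample=0.6, rng=rng)
    print(tree_id, rows, cols)
```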

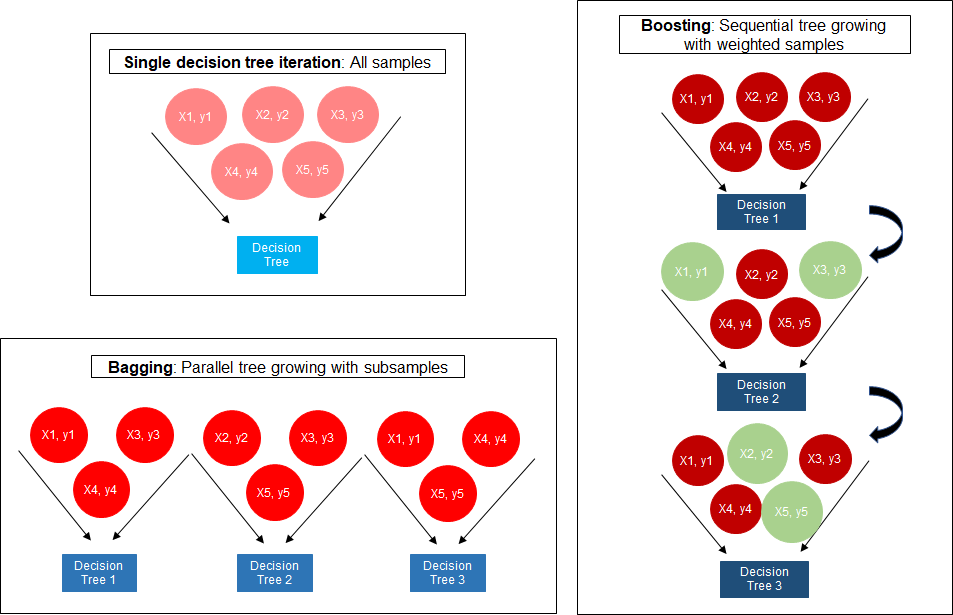

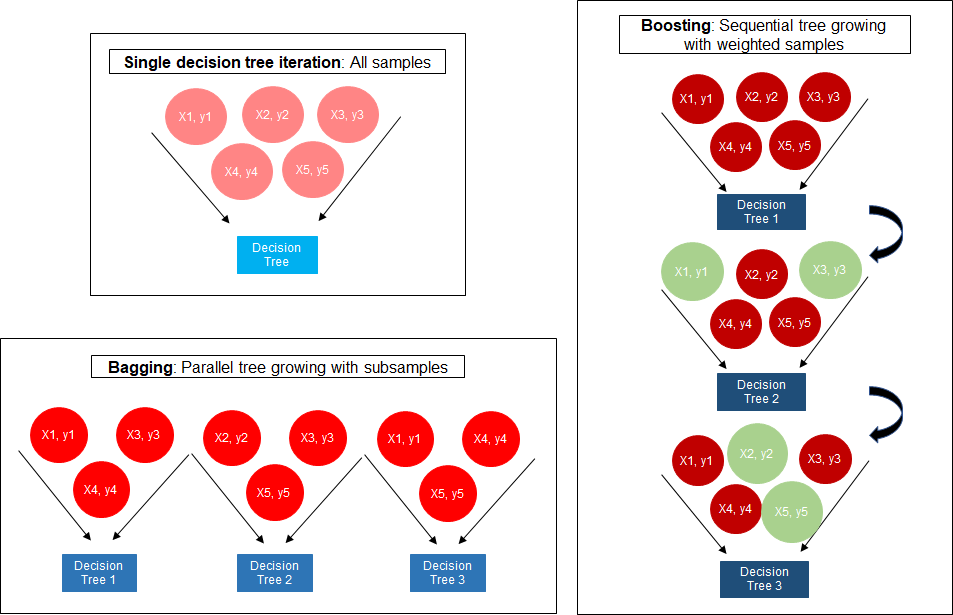

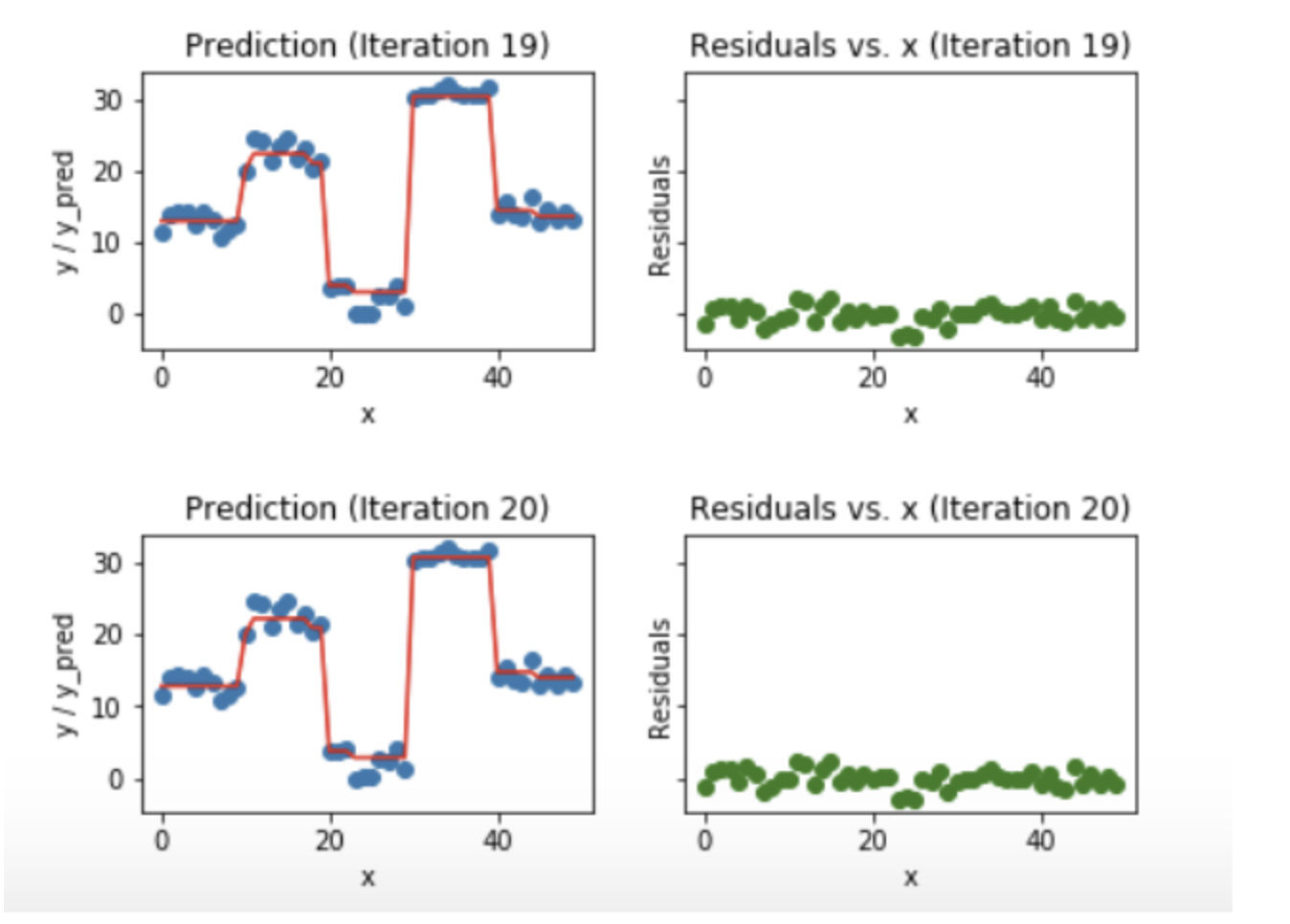

A problem with gradient boosted decision trees is that they learn quickly and can overfit the training data. Less correlation between the trees translates to better performance of the ensemble.
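One standard way to see why lower correlation helps: for an average of n predictors, each with variance sigma^2 and pairwise correlation rho, the variance of the average is rho * sigma^2 + (1 - rho) * sigma^2 / n. The sketch below computes that formula and checks it by simulation. It models a simple average (as in bagging), so treat it as intuition for boosted ensembles rather than an exact description of them; the function names are hypothetical.

```python
import math
import random
import statistics

def ensemble_variance(sigma2, rho, n_trees):
    """Variance of the mean of n_trees equally correlated predictors."""
    return rho * sigma2 + (1.0 - rho) * sigma2 / n_trees

def simulated_variance(rho, n_trees, n_draws=20000, seed=0):
    """Monte Carlo check: each prediction = shared noise + independent noise,
    mixed so every prediction has variance 1 and pairwise correlation rho."""
    rng = random.Random(seed)
    means = []
    for _ in range(n_draws):
        shared = rng.gauss(0.0, 1.0)
        preds = [math.sqrt(rho) * shared + math.sqrt(1.0 - rho) * rng.gauss(0.0, 1.0)
                 for _ in range(n_trees)]
        means.append(sum(preds) / n_trees)
    return statistics.pvariance(means)

for rho in (0.0, 0.5, 0.9):
    print(rho, round(ensemble_variance(1.0, rho, 10), 3), round(simulated_variance(rho, 10), 3))
```

As rho approaches 1, adding more trees stops reducing the variance of the ensemble, which is why decorrelating the trees matters.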

Under the hood, gradient boosting is a greedy algorithm and can overfit training datasets quickly. In this case, X could be 3 (1 + 1 + 1), 18 (6 + 6 + 6), or somewhere between 3 and 18, since the highest number on a die is 6 and the lowest is 1.

One effective way to slow down learning in a gradient boosting model is to use a learning rate, also called shrinkage or eta in the XGBoost documentation. Extreme Gradient Boosting, or XGBoost for short, is an efficient open-source implementation of the gradient boosting algorithm.
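To show what shrinkage does, here is a minimal gradient boosting sketch for squared-error loss using one-feature regression stumps. Each round fits a stump to the current residuals (the negative gradient) and adds only learning_rate times its output. The data, stump learner, and function names are assumptions made for this illustration; XGBoost itself grows full regularized trees.

```python
def fit_stump(xs, residuals):
    """Least-squares stump on one feature: (threshold, left_value, right_value)."""
    pairs = sorted(zip(xs, residuals))
    mean_all = sum(residuals) / len(residuals)
    best = (float("inf"), None, mean_all, mean_all)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates identical x values
        left = [r for _, r in pairs[:i]]
        right = [r for _, r in pairs[i:]]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    return best[1], best[2], best[3]

def predict_stump(stump, x):
    threshold, lm, rm = stump
    if threshold is None:
        return lm  # constant stump when no split was possible
    return lm if x <= threshold else rm

def boost(xs, ys, n_rounds, learning_rate):
    """Return the training MSE after each boosting round."""
    preds = [sum(ys) / len(ys)] * len(ys)  # start from the mean
    history = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        # Shrinkage: add only a fraction of the new stump's correction.
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
        history.append(sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys))
    return history

xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 3.0, 2.0, 5.0, 4.0, 7.0, 6.0, 9.0]
for eta in (1.0, 0.1):
    print(eta, [round(v, 3) for v in boost(xs, ys, 20, eta)[:5]])
```

With a smaller learning rate the training error falls more slowly per round, so more trees are needed; the trade-off is that each tree has less influence, which tends to make the ensemble less prone to overfitting.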

XGBoost was initially developed by Tianqi Chen and was described by Chen and Carlos Guestrin in their 2016 paper titled "XGBoost: A Scalable Tree Boosting System."

Enhancements to Basic Gradient Boosting

Regularized Gradient Boosting is also an … As such, XGBoost is an algorithm, an open-source project, and a Python library.
